Candidate Generation

The reason candidate generation is so useful is that it allows us to reduce our search space from the entire corpus, often petabytes in size, to a searchable subspace of typically hundreds or thousands of items

Overall, its goal is to reduce the search space while still keeping all true positives

It's also done relatively quickly: ideally you can index into / look up a matrix or user history in a fast fashion, on services that our web servers keep updated in the background. In other words, the hope is that as users use our service, their item and user history is updated and sent to our search systems in real time, so that when you need this information our architecture is set up to respond in real time

In Candidate Generation, Queries are Users and you compare them to Items

User-Item Matrices

Our Embeddings concept paper discusses this in detail, but for all of our systems you generally have 2 key components - queries (users) and items

Over time users engage with items (which might be other users!), and this engagement is a scorable numeric metric: they rate them (movies), watch them (YouTube video watch time), read them (newspaper post read-through length), or engage with them (checking another user's page)

These co-occurrence matrices showcase how our users (queries) interact / are related to our Items

For all intents and purposes, the matrix of how our users engage with items is perfectly fine to use as our embeddings. For example, if the entries are users' video watch time and user 1 watched item 1 for 90 minutes, then that entry is 90

Or you could use the percentage of each video watched through instead (a numeric feature standardization), which would bring each number into the standardized range of $[0, 1]$

With this setup you have now projected our Users and Items into an embedding space: each user is a vector in $\mathbb{R}^{n}$, where $n$ is the total number of videos in our corpus. Since $n$ is probably humongous, you need to somehow filter this down
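As a minimal sketch of the two ideas above (raw watch time as the embedding, then standardizing to the fraction watched), assuming made-up watch times and hypothetical video lengths: 90 minutes of a 120-minute video becomes 0.75. Rows of the resulting matrix act as user embeddings and columns as item embeddings.

```python
import numpy as np

# Hypothetical data: minutes each user watched each video (0 = never watched)
watch_minutes = np.array([
    [90.0, 0.0, 30.0],
    [45.0, 12.0, 0.0],
])
video_length_minutes = np.array([120.0, 12.0, 60.0])  # assumed video durations

# Fraction of each video watched, clipped into [0, 1] (rewatches could push it above 1)
A = np.clip(watch_minutes / video_length_minutes, 0.0, 1.0)

user_embeddings = A    # row i: user i as a vector in R^n (n = number of videos)
item_embeddings = A.T  # row j: video j as a vector in R^m (m = number of users)
print(A)
```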

The usual idea is to split this into two separate matrices, $U$ ($m \times d$, for $m$ users) and $V$ ($n \times d$), where $d \ll n$, to ultimately reduce the total size of our search space while preserving information. This is Matrix Factorization, which you will see later!

Similarity

If you want to find similar users, you have a similarity function $s: E \times E \to \mathbb{R}$ that takes a query embedding $q$ and compares it to every other embedding $x$ in our embedding space $E$ to find similar vectors, which may be Users or Items. This gives us some flexibility ("find similar users / items to user Q"), and then you can use ScaNN (Scalable Nearest Neighbors) to find the top K nearest vectors

Our vector similarity scoring can be anything from cosine similarity to dot products, but for this example, if you normalize all of the vectors to unit length ($\|x\| = 1$), the two give identical scores, so you can use either.

Without that normalization, popular videos tend to have embeddings with larger norms, so dot-product scoring would probably favor those videos
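A small sketch of the norm effect described above, using hypothetical 2-D embeddings: the "popular" item has a large norm, so it wins under the raw dot product, while the "niche" item points closer to the query and wins under cosine similarity. Once every vector is normalized to unit length, the two scores coincide.

```python
import numpy as np

query = np.array([1.0, 1.0])
# Hypothetical item embeddings: the popular item has a much larger norm
niche = np.array([1.0, 0.9])
popular = np.array([5.0, 2.0])


def cosine(a, b):
    return a @ b / (np.linalg.norm(a) * np.linalg.norm(b))


def unit(v):
    return v / np.linalg.norm(v)


print("dot:   ", query @ niche, query @ popular)                # popular scores higher
print("cosine:", cosine(query, niche), cosine(query, popular))  # niche scores higher

# With unit-length vectors, the dot product equals cosine similarity
print(np.isclose(unit(query) @ unit(popular), cosine(query, popular)))
```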

Filtering and Factorization

Filtering leverages the idea that users who have agreed in the past will agree in the future, based on user similarity or item similarity

Either way, you can use embedding matrices for Queries (Users), Items, or a combination of them. Similarity between items is calculated using metrics such as cosine similarity, Pearson correlation, or the Jaccard index
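The three metrics named above can be sketched in a few lines of NumPy. This is a simplified version that computes over all dimensions; classic user-based CF often restricts Pearson correlation to co-rated items only, which is not done here.

```python
import numpy as np


def cosine_similarity(a, b):
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0


def pearson_correlation(a, b):
    # Cosine similarity of mean-centered vectors, which removes each vector's offset
    return cosine_similarity(a - a.mean(), b - b.mean())


def jaccard_index(a, b):
    # For binary interactions: |items both touched| / |items either touched|
    a, b = a.astype(bool), b.astype(bool)
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0


u = np.array([1, 1, 0, 1])
v = np.array([1, 0, 0, 1])
print(cosine_similarity(u, v), pearson_correlation(u, v), jaccard_index(u, v))
```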

To find recommendations, you compare the query (User embedding) to all Item embeddings using similarity metrics (e.g., dot product, cosine similarity), which will ultimately give you the top Items for a User
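A minimal sketch of that query-to-all-items comparison, assuming the item embeddings are stored as rows of a dense matrix: score every item with one matrix-vector product, then pull the top K with `np.argpartition` so that only those K scores need sorting. `top_k_items` is a hypothetical helper name.

```python
import numpy as np


def top_k_items(user_embedding, item_embeddings, k):
    """Score every item with a dot product and return the indices of the k best, best first."""
    scores = item_embeddings @ user_embedding     # one score per item
    top = np.argpartition(-scores, k - 1)[:k]      # unordered top-k in linear time
    return top[np.argsort(-scores[top])]           # sort only those k


items = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, 0.0]])
user = np.array([1.0, 0.2])
print(top_k_items(user, items, k=2))
```

In practice you would also drop items the user has already interacted with before taking the top K.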

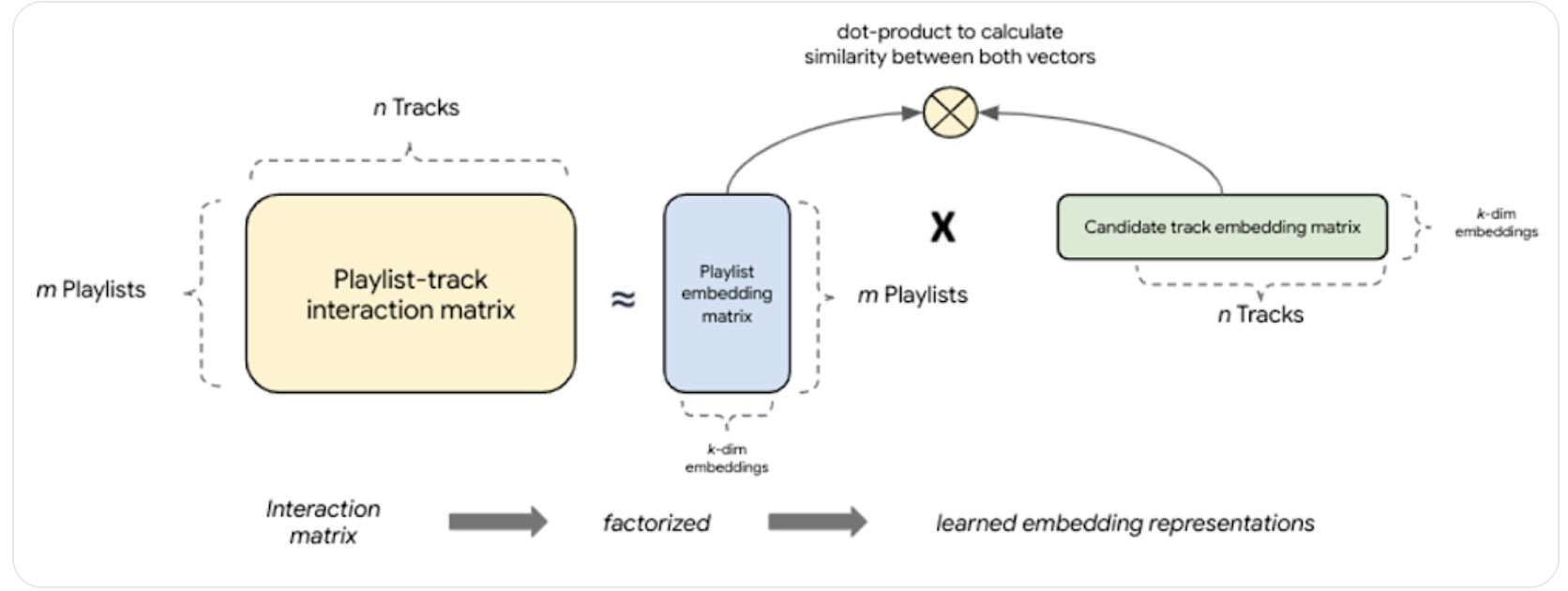

This example from Google shows how, if you have playlists that contain songs, you can use this co-occurrence matrix, factorize it, and then find similar playlists. Factorizing it into $d$ dimensions gives each playlist its top $d$ latent features, which lets us compare it to other playlists using geometric vector distance.

This is similar to factorizing a User x Item matrix and then taking a dot product with items to find which ones would be useful to a user. It's a bit odd because we're saying you can compare track embeddings to playlist embeddings, but ultimately we're calculating the interaction score / goodness score of adding a new track to a playlist

Item Content Filtering

- Recommends items to a user based on the similarity between items

- If each of our items has some generic features that describe it, similar to our User-Item matrices you can just host all of these features in a matrix and consider it our Item Embeddings

- If you have a binary set of features, then the dot product over them is simply a count of the features the two items share!

- If you have a range of numeric values then our Embedding similarity metrics will help us calculate the score between items based on similar dimensions
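To make the binary-feature point concrete, here is a tiny sketch with hypothetical genre-style features: the dot product of two 0/1 vectors adds 1 exactly where both items have the feature, so it counts shared features.

```python
import numpy as np

features = ["comedy", "drama", "animated", "english", "under_90_min"]  # hypothetical
item_a = np.array([1, 0, 1, 1, 1])
item_b = np.array([1, 0, 1, 0, 1])
item_c = np.array([0, 1, 0, 1, 0])

print(item_a @ item_b)  # shared: comedy, animated, under_90_min
print(item_a @ item_c)  # shared: english
```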

For content based filtering, common evaluation metrics are based on similarities of items, such as Cosine distance of item vectors

| Pros | Cons |
|---|---|
| Don't need user information for items | |
| Can compute quickly, and in a distributed manner | Matrix has a large memory footprint |

User-Item Collaborative Filtering

Collaborative Filtering allows us to use Users and Items at the same time! It recommends items to user A based on the preferences / history of a similar user B

In collaborative filtering, prediction and classification metrics like precision and recall are more common than straight similarity metrics

Idea Behind Collaborative Filtering

- The desire is to predict user preferences based on previous history:
  - A set of users $\mathcal{U} = \{u_1, u_2, \dots, u_m\}$
  - A set of items $\mathcal{I} = \{i_1, i_2, \dots, i_n\}$ that are to be recommended to an active user $u_a$
  - Learn a function $f$, based on the user's past interaction data, which predicts the likelihood of item $i$ being relevant to $u_a$
- Essentially, you pull all user interactions and start to find users who have interacted with past items in the same way
  - Compare $u_a$ to another user $u_b$; if they are similar on some historic items, you can assume that $u_a$ and $u_b$ might have the same preferences
  - If that's the case, and $u_b$ enjoyed an item that $u_a$ hasn't seen yet, you should offer it to user $u_a$
  - How is this done?
    - You need to be able to find the K Nearest Neighbors (i.e., the K nearest users!) of a user, which could be based on:
      - User-Item relations:
        - Item ratings
        - Item usages
        - Item purchases
        - etc...
      - User relations:
        - User location
        - User demographics
        - etc...
    - Once you have some way to characterize these users, you can start to rank nearest neighbors

| Pros | Cons |
|---|---|
| Able to introduce users to new items | Cold start problem for new items |
| Clean interface / interaction | Sparse datasets that take up a lot of memory |
| Can randomly seed user with user demographics | Popular items get a disproportionate amount of attention |

Collaborative Filtering Input

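The input is typically a User-Item Matrix where Users are rows and Items are columns. The matrix below is our embeddings, and as far as this page is concerned, that's it!

$$
A = \begin{bmatrix}
A_{11} & A_{12} & \cdots & A_{1n} \\
A_{21} & A_{22} & \cdots & A_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
A_{m1} & A_{m2} & \cdots & A_{mn}
\end{bmatrix} \in \mathbb{R}^{m \times n}
$$

- Where:
  - $A$ is the user-item matrix
  - $A_{ij}$ represents the interaction between user $i$ and item $j$
  - $m$ is the number of users
  - $n$ is the number of items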

TL;DR

- Raw Data:
  - You start with user-item interaction data (e.g., user watched video, user rated movie, user clicked product).
- User-Item Matrix ($A$):
  - Rows = Users
  - Columns = Items
  - Entries = Interaction value (e.g., rating, watch time, click, etc.)
  - Example: $A_{ij}$ is the interaction between user $i$ and item $j$

End Goal

After "doing something" with our input here, we'd like to be able to predict which items a user will like / interact with next

Our end goal is to "fill in" the User-Item interactions that currently do not exist, i.e. you want to assign values to all $A_{ij}$ that are currently missing

Collaborative Filtering Algorithm

The actual algorithm would just involve taking that matrix and computing cosine, Jaccard, or basic dot-product similarity between the rows to get the users most similar to the query user $q$, and then retrieving the top entries in those neighbors' rows that are missing from $A_q$ to find the new recommendations for user $q$

In the table below, User 1 is our Query, i.e. the user we'd like to create recommendations for, and our current scope is the top K = 2 most similar users

The 2 most similar are Users 5 and 2 (which you could find with a vector similarity metric: with NA treated as 0, their cosine similarities to User 1 are roughly 0.82 and 0.50). User 1 has not interacted with Item B, which both of these similar users have rated as Good = 1, so you can recommend Item B to User 1

| User | Item A | Item B | Item C | Item D | Item E | Item F |
|---|---|---|---|---|---|---|
| **User 1 (Query)** | 1 | NA | 1 | 0 | 0 | 0 |
| User 2 | NA | 1 | 1 | 0 | 0 | 0 |
| User 3 | 0 | 1 | NA | 1 | NA | NA |
| User 4 | NA | 0 | 1 | 1 | 0 | 1 |
| User 5 | 1 | 1 | 1 | NA | 0 | 0 |

Some pseudocode for the actual algorithm to take a specific user and find their K nearest neighbors:

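```python
import heapq
from math import sqrt

# Assumption: user_item_matrix is a dict mapping each user ID to a list of
# interaction values (one per item), with NA stored as 0.


def find_similar_users_heap(user_item_matrix, target_user, k):
    # heapq is a min-heap, so push negated similarities to pop the most similar first
    max_heap = []
    for other_user, other_vector in user_item_matrix.items():
        if other_user == target_user:
            continue  # don't compare the target user with themselves
        similarity = calculate_similarity(user_item_matrix[target_user], other_vector)
        heapq.heappush(max_heap, (-similarity, other_user))

    resp = []
    for _ in range(min(k, len(max_heap))):
        negated_similarity, other_user = heapq.heappop(max_heap)
        resp.append((other_user, -negated_similarity))
    return resp


def find_similar_users(user_item_matrix, target_user, k):
    # Step 1: Calculate similarity between target_user and all other users
    similarities = []
    for user, vector in user_item_matrix.items():
        if user != target_user:
            similarity = calculate_similarity(user_item_matrix[target_user], vector)
            similarities.append((user, similarity))

    # Step 2: Sort users by similarity in descending order
    similarities.sort(key=lambda x: x[1], reverse=True)

    # Step 3: Select the top K users with the highest similarity
    return [user for user, _ in similarities[:k]]


def calculate_similarity(user_vector1, user_vector2):
    # Cosine similarity; returns 0 when either user has no interactions
    dot_product = sum(a * b for a, b in zip(user_vector1, user_vector2))
    magnitude1 = sqrt(sum(a * a for a in user_vector1))
    magnitude2 = sqrt(sum(b * b for b in user_vector2))
    if magnitude1 == 0 or magnitude2 == 0:
        return 0.0
    return dot_product / (magnitude1 * magnitude2)
```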
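To check the table walkthrough end to end, here is a minimal NumPy sketch that rebuilds the example table (NA as NaN), ranks users by cosine similarity to User 1 with NA treated as 0, and recommends unseen items that every one of the K neighbors rated Good = 1. The `recommend` helper and the "liked by all neighbors" rule are illustrative assumptions; a real system would more likely aggregate neighbor scores.

```python
import numpy as np

NA = np.nan  # no interaction
items = ["A", "B", "C", "D", "E", "F"]
# Rows are Users 1-5 from the table; 1 = Good, 0 = Bad, NaN = no interaction
A = np.array([
    [1, NA, 1, 0, 0, 0],
    [NA, 1, 1, 0, 0, 0],
    [0, 1, NA, 1, NA, NA],
    [NA, 0, 1, 1, 0, 1],
    [1, 1, 1, NA, 0, 0],
])


def recommend(A, query, k):
    filled = np.nan_to_num(A, nan=0.0)  # assumption: treat NA as 0 for similarity
    norms = np.linalg.norm(filled, axis=1)
    sims = filled @ filled[query] / (norms * norms[query])  # cosine similarity
    sims[query] = -np.inf  # exclude the query user
    neighbors = np.argsort(-sims)[:k]
    # Candidates: items the query hasn't seen that every neighbor rated 1
    unseen = np.isnan(A[query])
    liked_by_all = np.all(A[neighbors] == 1, axis=0)
    recs = [items[j] for j in np.flatnonzero(unseen & liked_by_all)]
    return neighbors, recs


neighbors, recs = recommend(A, query=0, k=2)
print([int(u) + 1 for u in neighbors], recs)  # neighbors as 1-based user numbers
```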

Matrix Factorization

Matrix factorization is a technique used in recommender systems to decompose a large matrix into smaller matrices. This technique is particularly useful for collaborative filtering, where the goal is to predict user preferences for items based on past interactions.

For the above discussion on Collaborative Filtering, you may have noticed that one of the Cons was the gigantic user-item matrix, which makes it difficult to actually run the collaborative filtering algorithm to find similar users. You need a way to get past this

This was brought up in the User-Item Matrices section above: ideally you can reduce our gigantic matrix $A \in \mathbb{R}^{m \times n}$ into $U \in \mathbb{R}^{m \times d}$ and $V \in \mathbb{R}^{n \times d}$, where $d \ll n$. This would allow us to compare users and items much more efficiently by taking the dot product of two $d$-dimensional vectors (a row of $U$ and a row of $V$)

At the end of the day all you want are EMBEDDINGS that allow us to compute User-Item overlap before you go to Ranking!!!

Explanation

- Decomposition:
  - Using the matrix $A$ from our discussion above, where the rows represent Users and the columns represent Items
  - Google's docs on Matrix Factorization show how it decomposes the original matrix into two lower-dimensional matrices:
    - User Matrix ($U$): Represents latent features of users.
    - Item Matrix ($V$): Represents latent features of items.
  - Both of these matrices come from the dimensions of $A$ ($m \times n$), which get turned into $U$ ($m \times d$) and $V$ ($n \times d$)
  - The embeddings learned from decomposing $A$ give us these two new matrices, and their product $UV^T$ approximates the original matrix $A$
  - To break this down, you only need to keep 2 smaller matrices and combine a row of $U$ with a row of $V$ to approximately recover any entry $A_{ij}$ of the original matrix, which helps us speed up queries and reduce memory footprint
- Latent Features:
  - Latent features, particularly of size $d$, capture underlying patterns in the data, such as user preferences and item characteristics.
  - These features are not directly observable but are inferred from the interaction data.
- Optimization:
  - The decomposition is typically achieved through optimization techniques that minimize the difference between the original matrix and the product of the two lower-dimensional matrices.
  - Common optimization methods include Singular Value Decomposition (SVD) and Alternating Least Squares (ALS).

Formula

Given a user-item interaction matrix $A \in \mathbb{R}^{m \times n}$, matrix factorization aims to find matrices $U \in \mathbb{R}^{m \times d}$ and $V \in \mathbb{R}^{n \times d}$ such that:

$$A \approx U V^T$$

Where:

- $A$ is the original user-item interaction matrix.
- $U$ is the user matrix with dimensions $m \times d$ (where $m$ is the number of users and $d$ is the number of latent features).
- $V$ is the item matrix with dimensions $n \times d$ (where $n$ is the number of items and $d$ is the number of latent features).
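A quick sketch of what the factorization buys at the shapes above, using hypothetical sizes and random (untrained) factors: a single prediction $\hat{A}_{ij}$ is just the dot product of row $i$ of $U$ and row $j$ of $V$, and storing the two factors takes far fewer entries than storing $A$. The full $UV^T$ is deliberately never materialized.

```python
import numpy as np

m, n, d = 1_000, 50_000, 32  # hypothetical: users, items, latent features
rng = np.random.default_rng(0)
U = rng.normal(size=(m, d))  # user matrix, m x d
V = rng.normal(size=(n, d))  # item matrix, n x d

# Predicted interaction for user i and item j is one d-dimensional dot product
i, j = 3, 42
a_hat_ij = U[i] @ V[j]

# Storage comparison: full matrix vs. the two factors
full_entries = m * n            # entries in A
factor_entries = (m + n) * d    # entries in U and V combined
print(a_hat_ij, full_entries, factor_entries)
```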

First thoughts

You need an objective function:

- The first thought you should have is minimizing the difference between $A$ and $UV^T$, which intuitively means "ensure that $UV^T$ is as close to $A$ as possible", e.g. $\min_{U,V} \sum_{(i,j) \in \text{obs}} \left(A_{ij} - \langle U_i, V_j \rangle\right)^2$
- There are some general issues with this:
  - You can only sum over the observed values (which are all 1 for implicit feedback), meaning all of our unobserved values are lost
    - This leads to a model that can't really "learn" and just sees "good" results, since predicting 1 everywhere already gives minimal loss
  - You could treat the unobserved values as 0 to combat this, but that leads to a gigantic sparse matrix
- So you know you need to keep the unobserved entries and learn against them, but that's a gigantic matrix that you need to somehow solve for
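A tiny sketch of the degenerate case described above, with a hypothetical implicit-feedback matrix: when only observed entries (all 1s) enter the loss, the trivial factorization where every embedding is $[1]$ reaches zero loss while predicting 1 for every user-item pair.

```python
import numpy as np

# Hypothetical implicit feedback: 1 = observed interaction, NaN = unobserved
A = np.array([
    [1.0, np.nan, 1.0],
    [np.nan, 1.0, np.nan],
])


def observed_only_loss(A, U, V):
    observed = ~np.isnan(A)
    pred = U @ V.T
    return float(np.sum((A[observed] - pred[observed]) ** 2))


# Trivial factorization with d = 1: every user and item embedding is [1]
U = np.ones((2, 1))
V = np.ones((3, 1))
print(observed_only_loss(A, U, V), U @ V.T)  # zero loss, yet every prediction is 1
```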

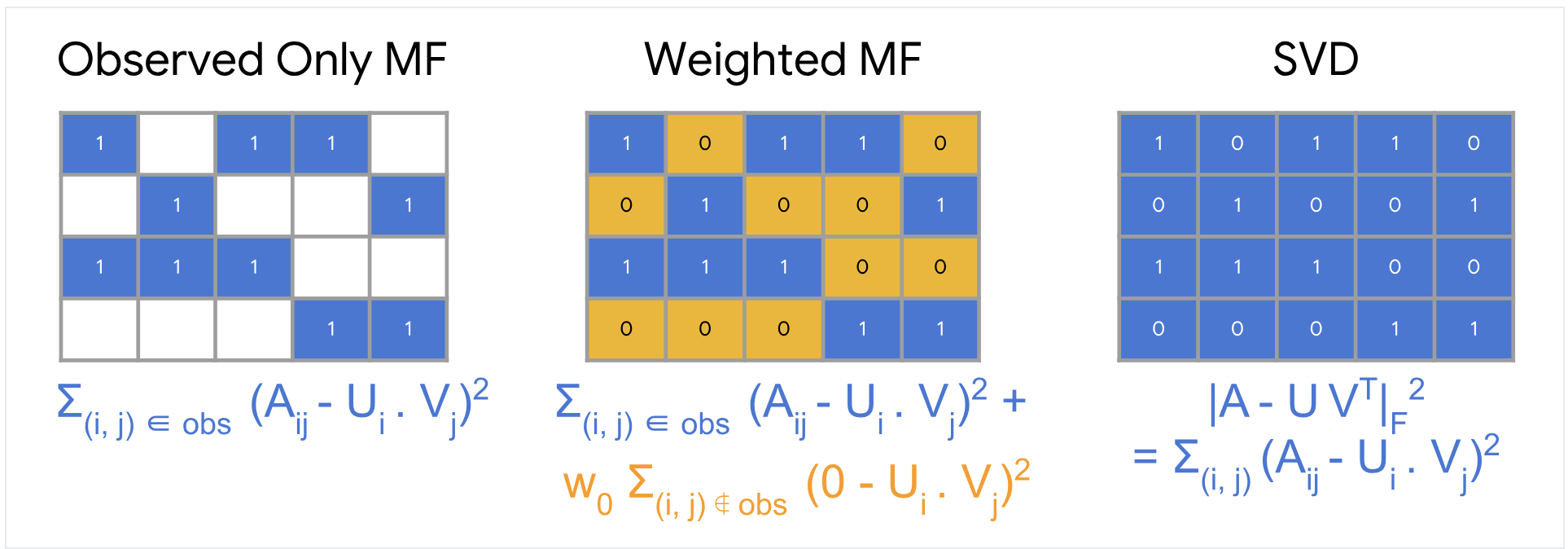

The figure above, from Google's documentation, visualizes the different objective functions covered below:

- Observed only means you only focus on user-item interactions that have occurred
- Weighted lets us utilize unseen user-item interactions, weighted to a certain degree
- SVD treats every unobserved interaction as 0 and minimizes the difference over every entry of the matrix

Singular Value Decomposition (SVD)

Singular Value Decomposition, as shown above, solves the all-entries version of the objective, where every unobserved interaction is treated as 0

Google's docs note that it is rarely used because the input matrices are so sparse: the resulting $UV^T$ ends up close to zero and generalizes poorly, so it ultimately isn't worth it most of the time. A more reliable and stable method is Weighted Matrix Factorization
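For reference, a minimal truncated-SVD sketch with NumPy on a hypothetical small matrix whose unobserved entries are already 0 (exactly the SVD objective's assumption). Splitting $\sqrt{s}$ between the two factors is one common convention, not the only one; by the Eckart-Young theorem, the rank-$d$ product is the best approximation in Frobenius norm.

```python
import numpy as np

# Hypothetical 4 x 5 interaction matrix, unobserved entries set to 0
A = np.array([
    [5, 4, 0, 0, 1],
    [4, 5, 0, 0, 0],
    [0, 0, 5, 4, 0],
    [0, 1, 4, 5, 0],
], dtype=float)

d = 2  # number of latent features to keep
u, s, vt = np.linalg.svd(A, full_matrices=False)
U = u[:, :d] * np.sqrt(s[:d])  # m x d user embeddings
V = vt[:d].T * np.sqrt(s[:d])  # n x d item embeddings
A_hat = U @ V.T                # best rank-d approximation of A
print(np.round(A_hat, 2))
```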

Weighted Matrix Factorization

- Weighted Matrix Factorization (WMF) breaks things up between observed and unobserved observations

- This is helpful because the unobserved observations can be in the range of millions - for all the videos on YouTube, any user probably watches ~100 but there are millions of unobserved ones

- Therefore, WMF introduces a new hyperparameter, $w_0$, that weights those unobserved observations and reduces the computational complexity of handling them

- This is useful because it decomposes the objective function into 2 specific sums that are easy to compute over sparse matrices; ultimately, minimizing this objective is how you create our underlying $U$ and $V$ matrices from $A$

In the equation below there's a regularization term not discussed in the Google docs; it's a useful tool during training time

$$
\min_{U \in \mathbb{R}^{m \times d},\, V \in \mathbb{R}^{n \times d}} \sum_{(i,j) \in \text{obs}} w_{i,j} \left(A_{ij} - \langle U_i, V_j \rangle\right)^2 + w_0 \sum_{(i,j) \notin \text{obs}} \langle U_i, V_j \rangle^2 + \lambda \left(\|U\|_F^2 + \|V\|_F^2\right)
$$

Where:

- $\sum_{(i,j) \in \text{obs}}$ represents the sum over all observed interactions.
- $\sum_{(i,j) \notin \text{obs}}$ represents the sum over all unobserved interactions.
- $w_{i,j}$ is the weight for observed interactions.
  - Typically this is a function of the frequency of query $i$ and item $j$; it allows us to reduce the overall effect of frequently visited videos
- $w_0$ is the hyperparameter for unobserved interactions.
- $\lambda$ is the regularization parameter.
  - Regularization helps prevent overfitting by penalizing large values in the user and item matrices
  - Another way to handle this is to choose the weights carefully and disregard the regularization term entirely

Solving for this equation:

- You can use Stochastic Gradient Descent (SGD) or Weighted Alternating Least Squares (WALS)

- SGD is more generic and battle-tested

- WALS is more specific to this objective

- WALS is most likely the ideal choice because the objective is quadratic in each of the two matrices: with $V$ fixed it is quadratic in $U$, and vice versa (it is not jointly convex)

- Each stage can be solved as a linear system, which allows us to distribute the computation across nodes and ultimately converge on finalized matrices and
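A minimal dense WALS sketch for the objective above, under simplifying assumptions: a constant weight `w_obs` stands in for $w_{i,j}$, and everything fits in memory. Each half-step solves one small $d \times d$ linear system per row; because $w_0$ applies to every column, the shared $w_0 F^T F$ term is computed once and each row only adds a correction for its observed entries, which is what keeps the update cheap on sparse data. `wals` and `wmf_loss` are hypothetical helper names, not a library API.

```python
import numpy as np


def wmf_loss(A, observed, U, V, w_obs, w0, lam):
    """Weighted MF objective, with a constant w_obs standing in for w_ij."""
    pred = U @ V.T
    observed_term = w_obs * np.sum((A[observed] - pred[observed]) ** 2)
    unobserved_term = w0 * np.sum(pred[~observed] ** 2)
    regularization = lam * (np.sum(U ** 2) + np.sum(V ** 2))
    return float(observed_term + unobserved_term + regularization)


def wals(A, observed, d=2, w_obs=1.0, w0=0.1, lam=0.1, iters=20, seed=0):
    """Alternate exact least-squares solves for U (V fixed) and V (U fixed)."""
    rng = np.random.default_rng(seed)
    m, n = A.shape
    U = rng.normal(scale=0.1, size=(m, d))
    V = rng.normal(scale=0.1, size=(n, d))
    target = np.where(observed, A, 0.0)  # unobserved entries only enter through w0

    def solve_rows(F, targets, mask):
        # Shared term for every row: w0 * F^T F + lam * I
        shared = w0 * F.T @ F + lam * np.eye(d)
        out = np.empty((targets.shape[0], d))
        for r in range(targets.shape[0]):
            cols = np.flatnonzero(mask[r])
            F_obs = F[cols]
            lhs = shared + (w_obs - w0) * F_obs.T @ F_obs
            rhs = w_obs * F_obs.T @ targets[r, cols]
            out[r] = np.linalg.solve(lhs, rhs)
        return out

    for _ in range(iters):
        U = solve_rows(V, target, observed)      # V fixed: one linear system per user
        V = solve_rows(U, target.T, observed.T)  # U fixed: one linear system per item
    return U, V


# Hypothetical implicit feedback: 1 = observed interaction, NaN = unobserved
A = np.array([
    [1.0, np.nan, 1.0, np.nan],
    [np.nan, 1.0, np.nan, 1.0],
    [1.0, 1.0, np.nan, np.nan],
])
observed = ~np.isnan(A)
U, V = wals(A, observed)
print(np.round(U @ V.T, 2))
```

Since each half-step exactly minimizes the objective over one matrix, the loss never increases from one iteration to the next.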

| Pros | Cons |
|---|---|
| No domain knowledge needed | Cold start problem for new items |
| Battle tested and useful | Not able to add in other side features |
| Good starting point for most rec systems for CG | |

- For the cold start problem:

- For items with no interactions at all (and therefore no embeddings): most use cases just average the embeddings of items in a similar "category", as defined by domain knowledge, and then use that as a starting point to iterate and update

- For a new item or new user with limited interactions, a single WALS iteration should give a useful embedding: hold the other matrix fixed (e.g., $U$ for a new item) and solve for the new row from its few interactions
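A minimal fold-in sketch for a new item, assuming a trained user matrix $U$ and the same $w_{\text{obs}}$, $w_0$, $\lambda$ weighting as the WMF objective: it is one WALS item update for a single column, so the rest of the model is untouched. `fold_in_item` and the tiny $U$ below are hypothetical.

```python
import numpy as np


def fold_in_item(U, item_column, observed_users, w_obs=1.0, w0=0.1, lam=0.1):
    """One WALS half-step for a single new item, holding the user matrix U fixed."""
    d = U.shape[1]
    U_obs = U[observed_users]  # embeddings of the users who interacted with the item
    # Same normal equations as a WALS item update: w0 over all users, w_obs on observed ones
    lhs = w0 * U.T @ U + (w_obs - w0) * U_obs.T @ U_obs + lam * np.eye(d)
    rhs = w_obs * U_obs.T @ item_column[observed_users]
    return np.linalg.solve(lhs, rhs)


# Hypothetical trained user embeddings (m = 3 users, d = 2)
U = np.array([
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
])
item_column = np.array([1.0, 0.0, 1.0])  # users 0 and 2 interacted with the new item
observed_users = np.array([True, False, True])
v_new = fold_in_item(U, item_column, observed_users)
print(np.round(U @ v_new, 3))  # predicted affinity of each user for the new item
```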

Updating Filtering and Factorization Models

Incrementally updating these models would be beneficial for new User-Item interactions that you haven't seen before; ideally you can update our latent matrices $U$ and $V$ without having to rerun the entire training process

Most of the time in practice you would simply retrain the model every night on the new interactions, because retraining isn't that costly

That being said, there are methods to incrementally update these models:

- Fold In

- Incremental Learning

DNN For Candidate Generation

- A DNN lets us address the Cons listed above for filtering and factorization:

- Using side features

- Cold start

- "Freshness" and bypassing "popular only" items via Dot product

- Pretty much everything is the same for creating / fetching the user-item embeddings, but you can also add in other features such as generic user embeddings, user metadata and categorical features, and other account information that might be relevant

- A Softmax Layer will allow us to do multi-class classification, where you basically predict the probability of usage over an entire corpus / corpus subset of videos

- The softmax layer would need to be trained over the entire corpus since any of the videos have a potential to be included in this (sub)set

- Since our output is a probability distribution that can be compared to the one-hot ground truth (all 0s and a single 1), you can use the cross-entropy loss function

- You could also add in other hidden layers and non-linear (ReLU) layers, or anything else, to capture non-linear relationships

- You could also change the entire hidden layers to remove the matrix factorization phase, and use the hidden layers as a way to map user features into a projected embedding layer
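A forward-pass sketch of the softmax model described above, assuming hypothetical sizes and random untrained weights: user features go through a ReLU hidden layer into a $d$-dimensional query embedding, the softmax weights act as the item embeddings $V$, and the loss is cross-entropy against a one-hot target. Training (backpropagation) is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
num_features, hidden, d, corpus_size = 8, 16, 4, 10  # hypothetical sizes

W1 = rng.normal(scale=0.1, size=(num_features, hidden))  # hidden layer weights
W2 = rng.normal(scale=0.1, size=(hidden, d))             # projects to the query embedding
V = rng.normal(scale=0.1, size=(corpus_size, d))         # softmax weights = item embeddings


def forward(x):
    h = np.maximum(0.0, x @ W1)   # ReLU hidden layer
    query = h @ W2                # query (user) embedding in R^d
    logits = V @ query            # one logit per item in the corpus
    logits -= logits.max()        # numerical stability before exponentiating
    exp = np.exp(logits)
    return query, exp / exp.sum()


def cross_entropy(probs, target_item):
    # Ground truth is one-hot, so only the target item's log-probability contributes
    return -np.log(probs[target_item])


x = rng.normal(size=num_features)  # concatenated user features (hypothetical)
query, probs = forward(x)
loss = cross_entropy(probs, target_item=3)
print(query, loss)
```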

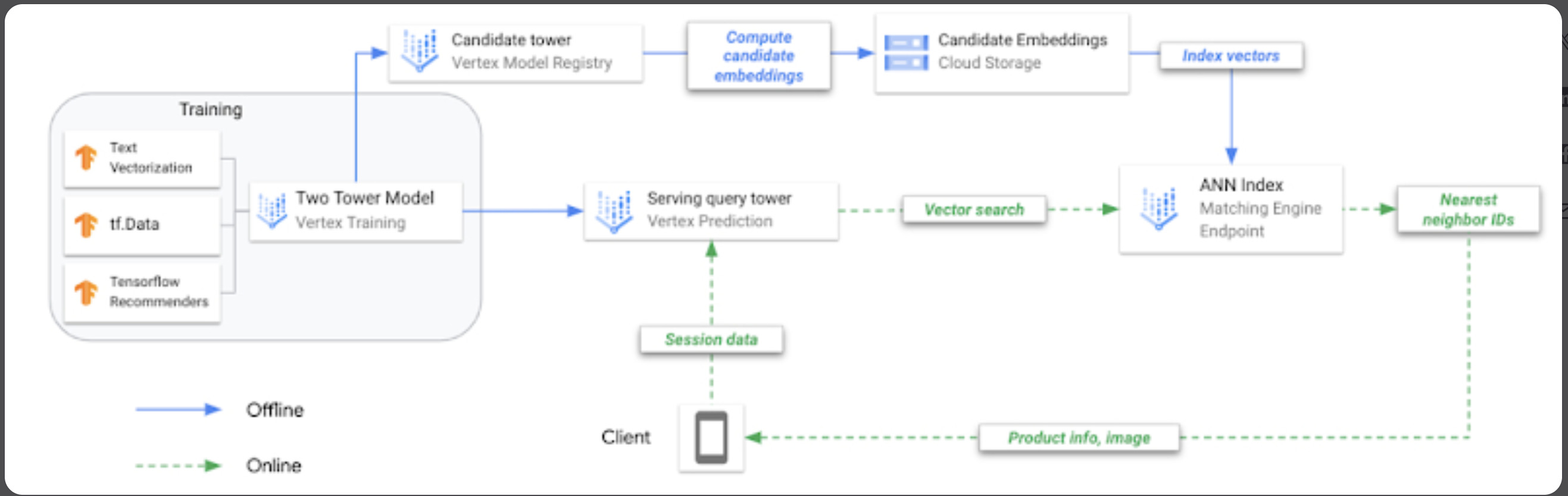

A DNN allows us to reduce latency at serving time by decoupling query embedding and item embedding creation, but you are still running a DNN instead of a matrix lookup, so overall it might be slower. You can pre-compute the candidate (item) embeddings and store them in a vector database for quick lookup

Here's an example of architecture from Google's Blog

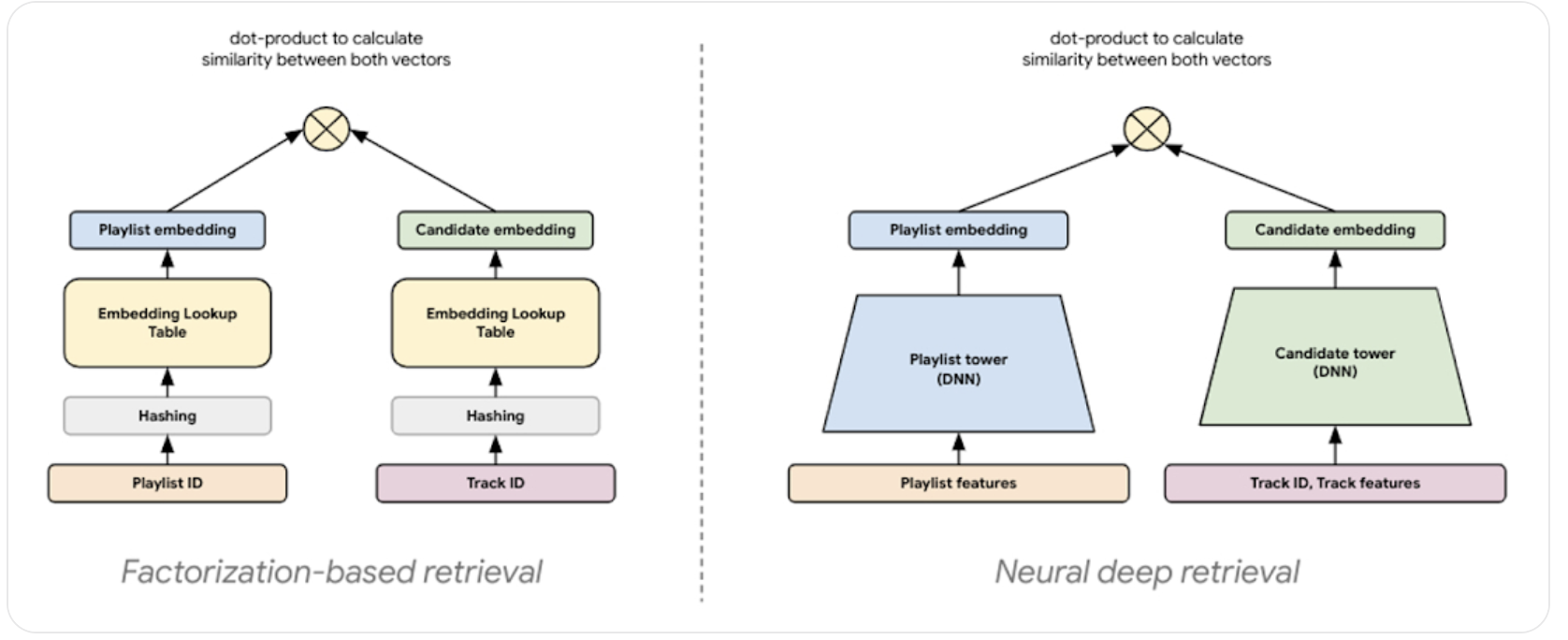

Two Towers

Two Towers also generates embeddings for users and items, similar to Matrix Factorization, except in this scenario there's one tower for Queries (Users) and one tower for Items. If you ran Two Towers on the same playlist and track factorization problem above, one tower would embed playlists and the other would embed tracks

Two Towers lets us create dynamic, and maybe even attention-based, embeddings, which differ from the static embeddings created via Filtering & Matrix Factorization. At the end, getting a recommendation works the same way: you compute the similarity of the query to all items (maybe using ScaNN (Scalable Nearest Neighbors)) and find the top K

- The following can all help find the approximate top-k items (from a large pool of item embeddings) for a user:
  - ScaNN
  - Faiss
  - Other approximate nearest neighbor (ANN) libraries
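A minimal two-tower retrieval sketch, assuming hypothetical feature sizes and random, untrained MLP weights: the item tower runs offline over the whole corpus, the query tower runs per request, and exact brute-force scoring stands in for an approximate index like ScaNN or Faiss. Both towers L2-normalize their outputs, so the dot product equals cosine similarity.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8  # shared embedding size (hypothetical)


def make_tower(in_dim, hidden=16, out_dim=d):
    """A tiny two-layer MLP with random (untrained) weights."""
    W1 = rng.normal(scale=0.3, size=(in_dim, hidden))
    W2 = rng.normal(scale=0.3, size=(hidden, out_dim))

    def tower(x):
        emb = np.maximum(0.0, x @ W1) @ W2
        # L2-normalize; the small epsilon guards against an all-zero ReLU output
        return emb / (np.linalg.norm(emb, axis=-1, keepdims=True) + 1e-12)

    return tower


query_tower = make_tower(in_dim=12)  # user features -> query embedding
item_tower = make_tower(in_dim=20)   # item features -> item embedding

item_features = rng.normal(size=(1_000, 20))
item_embeddings = item_tower(item_features)  # precomputed offline, e.g. into a vector index


def retrieve(user_features, k=5):
    q = query_tower(user_features)   # computed online per request
    scores = item_embeddings @ q     # exact brute-force scoring over every item
    top = np.argpartition(-scores, k - 1)[:k]
    return top[np.argsort(-scores[top])]


print(retrieve(rng.normal(size=12)))
```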

To be clear, a two tower model that outputs a single user embedding and a single item embedding only checks whether that one pair has affinity; pulling out the top-k items at large scale means scoring the query embedding against the whole item embedding matrix, typically with an approximate nearest neighbor index like the ones above

This will allow us to bypass the cold start problem, and the static embedding problem, but increases our latency as you need to use another DNN call in our Ranking service

Multi-Task Learning

The tasks of this model are important: if you strictly focus on "probability of engaging" you might end up recommending click-bait videos, and if you optimize "time spent watching" it might recommend videos that try to keep the user watching long into the video

Ultimately you want to use simple tasks to find relevant content for the users, and you could use multi-task learning to do so

TODO: Describe multi-task learning outputs and model weight updates using ./other_concepts/MULTITASK_LEARNING.md